C++11 saw a bunch of changes to template handling that are, overall, fairly minor. The first two I will cover allow what seem like fairly obvious things that I am sure most C++ programmers attempted when they were learning the language. The third does not let you express anything new; it is a way to help the compiler and linker do less work.

Right-angle Brackets

When nesting one template inside another, there is no longer a need to put a space between the closing “>”s (C++03 always parsed `>>` as the right-shift operator). For example, a poor man’s matrix can now be written as:

```cpp
std::vector<std::vector<double>>
```
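Here is a small sketch that compiles on its own. The names `make_identity` and `four_zeros` are made up for this example. The flip side of the change is that a genuine right shift inside a template argument list now has to be parenthesised. Otherwise its `>>` is read as two closing brackets.

```cpp
#include <array>
#include <cassert>
#include <cstddef>
#include <vector>

// C++11: the trailing ">>" closes both template argument lists.
// C++03 required "std::vector<std::vector<double> >".
std::vector<std::vector<double>> make_identity(std::size_t n) {
    std::vector<std::vector<double>> m(n, std::vector<double>(n, 0.0));
    for (std::size_t i = 0; i < n; ++i) {
        m[i][i] = 1.0;
    }
    return m;
}

// A right shift inside a template argument list now needs parentheses;
// without them, "16 >> 2" would end the argument list early.
std::array<int, (16 >> 2)> four_zeros{};  // std::array<int, 4>, all zero
```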

Not a big feature by any means, but a great improvement!

Template Aliases

There are limitations with typedef and templates. Specifically, a typedef can only name a single concrete type. It cannot itself be a template, so you cannot use it to fix some of a template’s arguments while leaving the others open. The standard example (as in, straight from the Standard) is providing your own allocator for a std::vector. Now you can define an alias template for that using:

```cpp
template<class T>
using Vec = std::vector<T, Alloc<T>>;

Vec<int> v; // same as std::vector<int, Alloc<int>> v;
```
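To see what the alias buys over C++03, here is a sketch that compiles on its own. The post does not define `Alloc`, so the minimal allocator below is an assumption: it just forwards to `operator new` and `operator delete`. `OldVec` shows the nested-typedef workaround that was needed before C++11. `sum_up_to` is a made-up usage example.

```cpp
#include <cassert>
#include <cstddef>
#include <new>
#include <type_traits>
#include <vector>

// Hypothetical stand-in for the Standard's "Alloc": a minimal C++11
// allocator that just forwards to global operator new/delete.
template<class T>
struct Alloc {
    using value_type = T;
    Alloc() = default;
    template<class U> Alloc(const Alloc<U>&) {}
    T* allocate(std::size_t n) {
        return static_cast<T*>(::operator new(n * sizeof(T)));
    }
    void deallocate(T* p, std::size_t) { ::operator delete(p); }
};
template<class T, class U>
bool operator==(const Alloc<T>&, const Alloc<U>&) { return true; }
template<class T, class U>
bool operator!=(const Alloc<T>&, const Alloc<U>&) { return false; }

// C++03 workaround: hide the typedef inside a class template.
template<class T>
struct OldVec {
    typedef std::vector<T, Alloc<T> > type;
};

// C++11 alias template.
template<class T>
using Vec = std::vector<T, Alloc<T>>;

// All three spellings name exactly the same type.
static_assert(std::is_same<Vec<int>, std::vector<int, Alloc<int>>>::value, "");
static_assert(std::is_same<Vec<int>, OldVec<int>::type>::value, "");

int sum_up_to(int n) {
    Vec<int> v;  // storage comes from Alloc<int>
    for (int i = 1; i <= n; ++i) v.push_back(i);
    int total = 0;
    for (int x : v) total += x;
    return total;
}
```

With the alias, users write `Vec<double>` where C++03 code had to write `OldVec<double>::type` (plus `typename` in dependent contexts).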

Note that ordinary type aliases can also be declared with the using syntax instead of typedef, which in my opinion is a far nicer syntax.
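For a non-template alias the two forms mean exactly the same thing. The difference is mostly readability, and it shows most with function pointers, where `using` puts the new name first. The names below (`Handler`, `Counts`, `remember`, `dispatch`) are illustrative only.

```cpp
#include <cassert>
#include <map>
#include <string>
#include <type_traits>

// The same aliases written both ways.
typedef void (*OldHandler)(int);  // the new name is buried mid-declaration
using Handler = void (*)(int);    // new name on the left, type on the right

typedef std::map<std::string, int> OldCounts;
using Counts = std::map<std::string, int>;

static_assert(std::is_same<Handler, OldHandler>::value, "");
static_assert(std::is_same<Counts, OldCounts>::value, "");

int last_seen = 0;
void remember(int x) { last_seen = x; }

// Calls the handler, then reports the value it stored.
int dispatch(Handler h, int x) {
    h(x);
    return last_seen;
}
```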

Extern Templates

To understand this feature, you first need to have some idea about how C++ compilers deal with templates. Let's see if I can explain this correctly… Consider this code snippet:

```cpp
Foo<int> f;
f.bar();
```

When the compiler reaches the first line, it does an implicit instantiation of Foo<int>, along with its constructor and destructor (if this has not already been done in this translation unit). That is, it generates the code for the int version of Foo. Generating the code on use makes sense for templates, as we do not know what types will be used when we declare the class (hence the use of templates…). The second line calls the bar() method of Foo<int>, so that function gets implicitly instantiated.
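Here is a minimal sketch of this on-demand behaviour. The post does not show Foo, so its members and the `Point` type are assumptions made for illustration. Only the members that are actually used get instantiated. That is why `Foo<Point>` is perfectly usable even though its `less()` method could never compile for `Point`.

```cpp
#include <cassert>

// Hypothetical definition of Foo; the post only shows how it is used.
template<class T>
class Foo {
public:
    explicit Foo(T v) : value_(v) {}
    T bar() const { return value_; }     // fine for any copyable T
    bool less(const Foo& other) const {  // needs operator< for T
        return value_ < other.value_;
    }
private:
    T value_;
};

struct Point { int x, y; };  // deliberately has no operator<

int use_point() {
    Foo<Point> f(Point{3, 4});  // instantiates Foo<Point> and its constructor
    Point p = f.bar();          // instantiates Foo<Point>::bar only
    // f.less(f);               // would not compile: Point has no operator<
    return p.x + p.y;
}
```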

A disadvantage of implicit instantiation is that we would have to call every method to let the compiler catch issues when using a class with a particular type. Also, instantiating a bit here and a bit there might be slower to compile. To work around this, we can do an explicit instantiation of the class:

```cpp
template class Foo<int>;
```

The disadvantage of this is that if your class has a method that is not usable for a particular type (e.g. because the method requires an operator that is not implemented for that type), an explicit instantiation will try to instantiate that method and fail. With implicit instantiation, methods are only instantiated when they are used, so the compiler never encounters the unusable method. All of this was already in C++03.
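The sketch below uses a hypothetical Foo whose `less()` member needs `operator<`. It explicitly instantiates `Foo<int>`, which works. It also shows, commented out, the explicit instantiation for a type without `operator<`, which the compiler would reject. `compare` and `point_x` are illustrative helpers.

```cpp
#include <cassert>

// Hypothetical Foo with one member that needs operator<.
template<class T>
class Foo {
public:
    explicit Foo(T v) : value_(v) {}
    T bar() const { return value_; }
    bool less(const Foo& other) const { return value_ < other.value_; }
private:
    T value_;
};

struct Point { int x, y; };  // no operator<

// Explicit instantiation definition: every member of Foo<int>, including
// less(), is instantiated here, so problems with T = int show up at once.
template class Foo<int>;

// template class Foo<Point>;  // error: less() needs Point < Point

int compare(int a, int b) {
    Foo<int> fa(a), fb(b);
    return fa.less(fb) ? -1 : (fb.less(fa) ? 1 : 0);
}

// Implicit instantiation of Foo<Point> still works: less() is never used.
int point_x(Point p) { return Foo<Point>(p).bar().x; }
```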

OK… more details about the compiler. What happens when two translation units that are compiled separately and then linked together both use Foo<int>? At compile time, the compiler has no idea that both files use that class, so it instantiates it for each file as needed. Not only is this a waste of compiler time, but the linker then has to spot the identical instantiations and discard all but one (ideally…), so it wastes linker time too. C++11 provides a way to deal with this. In one source file, Foo<int> is explicitly instantiated. Then, in all the other source files that link with it, the instantiation can be suppressed using:

```cpp
extern template class Foo<int>;
```
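Here is a sketch of how the pieces are usually split across files, using a hypothetical Foo and file names. It is written as one listing so that it compiles on its own. An explicit instantiation definition is allowed to follow an `extern template` declaration in the same translation unit. The members are defined outside the class body on purpose. Member functions defined inside the body are implicitly inline, and an `extern template` declaration does not stop inline functions from being instantiated.

```cpp
#include <cassert>

// ---- foo.h (hypothetical header, included by every source file) ----
template<class T>
class Foo {
public:
    explicit Foo(T v);
    T bar() const;
private:
    T value_;
};

// Defined outside the class body, so these members are not implicitly inline.
template<class T> Foo<T>::Foo(T v) : value_(v) {}
template<class T> T Foo<T>::bar() const { return value_ * 2; }

// Explicit instantiation declaration: do not instantiate Foo<int> here,
// another translation unit provides it.
extern template class Foo<int>;

// ---- foo.cpp (exactly one file in the program) ----
// Explicit instantiation definition: the code for Foo<int> is emitted here.
template class Foo<int>;

// ---- any other .cpp that includes foo.h ----
int twice(int x) { return Foo<int>(x).bar(); }
```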

One potential use for this is creating a shared library. If you know the finite set of types your template class/function is going to be used with, you can provide a header with just the declarations and the required extern lines. In the library source, you provide the definitions and explicitly instantiate them for those types. That way, users of your library just have to include the header and they are done: the compiler will not implicitly instantiate anything for those types.
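Here is a sketch of that library layout, again written as a single listing so it compiles on its own. The `Accumulator` class and the file names are hypothetical. When the two halves really are separate, the header is shipped to users and the .cpp is compiled into the library. Users can then only use `Accumulator<int>` and `Accumulator<double>`. Any other type compiles against the header but fails at link time, because no member definitions exist for it.

```cpp
#include <cassert>

// ---- accumulator.h: shipped with the library (hypothetical) ----
// Declarations only; users never see the member definitions.
template<class T>
class Accumulator {
public:
    void add(T x);
    T total() const;
private:
    T sum_{};
};

// The finite set of types the library supports.
extern template class Accumulator<int>;
extern template class Accumulator<double>;

// ---- accumulator.cpp: compiled into the library ----
template<class T>
void Accumulator<T>::add(T x) { sum_ += x; }

template<class T>
T Accumulator<T>::total() const { return sum_; }

template class Accumulator<int>;
template class Accumulator<double>;

// ---- user code: only needs accumulator.h ----
int add_up_to(int n) {
    Accumulator<int> acc;
    for (int i = 1; i <= n; ++i) acc.add(i);
    return acc.total();
}
```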

Ignoring everything I had said in the


Meet our hero, Milo Steamwitz. He knows exactly how he is going to make his fortune, but needs to generate some money to invest first. So it is off to some remote planet where large crystals are just lying about in caves waiting to be harvested. You start off in some sort of mine shaft that provides access to 16 caves to be explored. In each cave you have to run around, jumping between platforms, flicking switches and collecting all the crystals while avoiding various obstacles and shooting aliens. Each cave has a bit of a theme to it, with some having continuously falling rocks to avoid and others having “low gravity” (which does not let you jump higher, but does mean you get forced back whenever you shoot – interesting…). Once all the crystals are collected, the exit door unlocks.

I had played the first episode many times when I was younger, so I zoomed through to the end quite quickly. For each level there is a key you can collect that opens all the treasure chests scattered throughout the level, but with no siblings around to eliminate from the high-score board, my motivation to do so was limited… At the end of the first episode, Milo sells up his collected crystals and invests in a Twibble farm. It turns out that Twibbles are prolific at eating and breeding, so the planet's resources are soon used up. Also, no-one wants to buy Twibbles any more, so I guess he just abandoned them all to die of starvation.

The second and third episodes are very similar to the first. I think there is a slight increase in difficulty, but it is hard to judge given how much I had played the first episode previously. The difference I did notice was that a lot of levels required you to collect the crystals in different sections in a defined order. There were many places where the only way to go back for a crystal you missed was to die and restart the level. What is worse, there is a bug in the third game where the mine shaft has an area you cannot escape from (pictured). So the two levels there must be left until last, otherwise you have to restart the game.

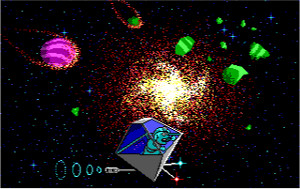

The final episode sees Milo giving up on farming. Instead he wants to buy a solar system to set up a vacation resort based on some perfectly legitimate-sounding scheme he saw on TV. Sure enough, once he signs the contract, the whole solar system gets destroyed in a supernova (I bet you could never have guessed that would happen from the game title…). Luckily, the supernova left a nice-looking backdrop for a space burger joint. It is now quite popular, and Milo can sell his burgers at a price that looks expensive even accounting for inflation.
